Data-driven model adaptation for identifying stochastic digital twins of bridges

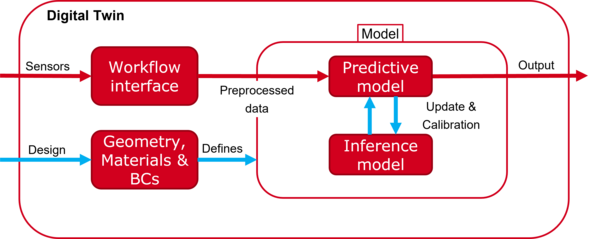

Simulation-based techniques are the standard for the design of bridges, primarily with respect to the mechanical response under different loading conditions. Based on these simulation models, a digital twin of the bridge can be established that has great potential to increase the information obtained from regular inspections and/or monitoring data. Potential applications are manifold. They include virtual sensor readings at positions that are inaccessible or too expensive to equip with a sensor system; investigations of the current performance of the structure, e.g. using the actual loading history instead of the design loads in a fatigue analysis; and planning and prioritizing maintenance measures, both for a single bridge and for a complete set of similar bridges. However, once the structure is built, its actual state and/or performance usually differs considerably from the design, for various reasons. As a consequence, a digital twin based on the initial design model usually provides only limited prediction quality for the future state. It is therefore essential to incrementally improve the design model until the simulated response of the digital twin matches the sensor readings within predefined bounds. This poses several challenges, which are addressed in this project.

The first challenge is the intrinsic uncertainty related both to the modelling assumptions within the digital twin (e.g. a linear elastic model) and to the parameters used in these models (e.g. Young's modulus). An automated procedure based on Bayesian inference will be established that incorporates uncertain sensor data (from both visual inspections and monitoring systems) into a stochastic updating of the model parameters, which subsequently enables the computation of probability distributions instead of deterministic values. In particular, the methodology must be able to handle a constant stream of monitoring data, i.e. it requires a continuous updating procedure. As a consequence, the key performance indicators derived from the digital twin as a basis for decision making are equipped with an uncertainty estimate. This uncertainty can often be reduced by accurate and optimally placed sensors as well as realistic modelling assumptions.
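The continuous updating described above can be illustrated with the simplest case in which Bayesian updating has a closed form: a single scalar parameter `theta` (for instance a hypothetical stiffness scaling factor) that enters the sensor model linearly, `y = g * theta + noise`, with a Gaussian prior and Gaussian measurement noise. This is a minimal sketch under these assumptions, not the project's procedure; realistic digital twins are nonlinear and usually require sampling-based or approximate inference. It shows that processing readings one at a time as they arrive yields the same posterior as processing them all at once, and that the posterior variance, i.e. the uncertainty attached to the parameter, shrinks with every reading. The names `GaussianBelief`, `update` and `batch_posterior` and all numbers are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class GaussianBelief:
    """Gaussian belief N(mean, var) about a scalar model parameter theta."""
    mean: float
    var: float

    def interval95(self) -> tuple[float, float]:
        """Symmetric 95 % credible interval of the Gaussian belief."""
        half = 1.96 * math.sqrt(self.var)
        return self.mean - half, self.mean + half


def update(belief: GaussianBelief, y: float, g: float, noise_var: float) -> GaussianBelief:
    """Conjugate update with one reading y = g * theta + eps, eps ~ N(0, noise_var)."""
    precision = 1.0 / belief.var + g * g / noise_var
    mean = (belief.mean / belief.var + g * y / noise_var) / precision
    return GaussianBelief(mean, 1.0 / precision)


def batch_posterior(prior: GaussianBelief, ys: list[float], g: float, noise_var: float) -> GaussianBelief:
    """Same posterior computed from all readings at once, for comparison."""
    precision = 1.0 / prior.var + len(ys) * g * g / noise_var
    mean = (prior.mean / prior.var + g * sum(ys) / noise_var) / precision
    return GaussianBelief(mean, 1.0 / precision)


# Hypothetical prior and a synthetic stream of sensor readings (not project data).
prior = GaussianBelief(mean=1.0, var=0.04)
stream = [2.10, 2.05, 2.12, 2.08]
g, noise_var = 2.0, 0.01

belief = prior
history = [belief]
for y in stream:  # continuous updating: one reading at a time
    belief = update(belief, y, g, noise_var)
    history.append(belief)

print(belief, belief.interval95())
```

For nonlinear models the same sequential principle applies (yesterday's posterior becomes today's prior), but the posterior no longer has a closed form.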

As there is no "correct" model, finding a good one is a complex process that involves several iterations of model improvement and testing. Visually comparing simulation results from calibrated models with experimental data is challenging, and identifying model deficiencies in this way is limited to the small number of data sets that can reasonably be inspected by eye. It is important to identify a) where the simulation model is wrong and b) how the model could be improved. Identifying the sources of a model deficiency in a) is complicated by the large amount of data usually available from monitoring systems and by the fact that modelling assumptions (e.g. boundary conditions such as a fixed vs. a flexible support) often cause discrepancies at sensor locations that do not coincide with the location of the model error. The identification of potential improvements in b) is based on machine learning techniques. The idea is to support the engineer developing physics-based models in understanding which relevant physical phenomena are not correctly represented in the model. This includes spatially variable model parameters via random fields or dictionary approaches for constitutive laws, where the most likely model improvement is selected from a dictionary of parameterized functions.
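The dictionary idea can be sketched as follows, assuming that the discrepancy between measured and simulated responses (the residuals) is available at normalised sensor positions `x` and that each dictionary entry is a correction term `a * phi(x)` with a single unknown amplitude `a`. Each candidate is fitted by least squares and scored by its Gaussian log-likelihood; because all candidates here have the same number of parameters, this ranking coincides with a ranking by BIC. The dictionary entries, the noise level and the synthetic residuals are hypothetical, and the project's actual selection procedure may differ (for example a fully Bayesian model comparison).

```python
import math
from typing import Callable, Dict

# Hypothetical dictionary of candidate correction shapes phi(x);
# each is scaled by one amplitude a, i.e. correction(x) = a * phi(x).
DICTIONARY: Dict[str, Callable[[float], float]] = {
    "linear": lambda x: x,
    "quadratic": lambda x: x * x,
    "sine": lambda x: math.sin(math.pi * x),
}


def fit_amplitude(phi, xs, residuals) -> float:
    """Least-squares amplitude minimising sum_i (r_i - a * phi(x_i))^2."""
    num = sum(phi(x) * r for x, r in zip(xs, residuals))
    den = sum(phi(x) ** 2 for x in xs)
    return num / den


def log_likelihood(phi, a, xs, residuals, noise_std) -> float:
    """Gaussian log-likelihood of the residuals given the correction a * phi."""
    sse = sum((r - a * phi(x)) ** 2 for x, r in zip(xs, residuals))
    n = len(xs)
    return -0.5 * sse / noise_std**2 - n * math.log(noise_std * math.sqrt(2.0 * math.pi))


def select_correction(xs, residuals, noise_std):
    """Return (best candidate name, its amplitude, all scores) over the dictionary."""
    scores = {}
    for name, phi in DICTIONARY.items():
        a = fit_amplitude(phi, xs, residuals)
        scores[name] = (log_likelihood(phi, a, xs, residuals, noise_std), a)
    best = max(scores, key=lambda name: scores[name][0])
    return best, scores[best][1], scores


# Synthetic residuals (measured minus simulated) along a normalised span coordinate.
xs = [0.1 * i for i in range(1, 10)]
residuals = [0.3 * x * x for x in xs]
best, amplitude, scores = select_correction(xs, residuals, noise_std=0.01)
print(best, round(amplitude, 3))  # → quadratic 0.3
```

In a real setting the residuals would come from a calibrated simulation and would be noisy, so candidates with more parameters would need an explicit complexity penalty.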

Performing the stochastic analysis for complex structures, in particular for quasi-real-time applications, requires high computational efficiency, i.e. a single evaluation of the digital twin should take as little time as possible. Therefore, response surfaces are developed that are trained on the computationally expensive forward model and can then replace it in subsequent evaluations. This requires goal-oriented error estimates that bound the approximation error introduced by the response surface. One solution for this task is the development of metamodels based on Gaussian Processes or Physics-Informed Neural Networks.
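The surrogate idea can be sketched with plain Gaussian process regression in one input dimension, written without external libraries so that the steps stay visible: a squared-exponential kernel with fixed, assumed hyperparameters, a Cholesky factorisation for training, and the predictive mean and variance at new inputs. The predictive variance is a built-in (though not goal-oriented) indicator of the approximation error, and here it also drives a simple adaptive refinement: the expensive model is evaluated next where the surrogate is most uncertain. `expensive_model` is a hypothetical stand-in for a finite element forward model, and maximum-variance sampling is a generic strategy, not necessarily the adaptive scheme developed in the project.

```python
import math


def kernel(x1: float, x2: float, length: float = 0.3, variance: float = 1.0) -> float:
    """Squared-exponential covariance with assumed (not fitted) hyperparameters."""
    return variance * math.exp(-0.5 * ((x1 - x2) / length) ** 2)


def cholesky(a):
    """Lower-triangular L with a = L L^T for a symmetric positive definite matrix."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def forward_sub(low, b):
    """Solve L y = b for lower-triangular L."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(low[i][k] * y[k] for k in range(i))) / low[i][i])
    return y


def back_sub_transposed(low, y):
    """Solve L^T x = y for lower-triangular L."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(low[k][i] * x[k] for k in range(i + 1, n))) / low[i][i]
    return x


class GPSurrogate:
    def __init__(self, xs, ys, nugget=1e-6):
        self.xs = list(xs)
        # A small nugget on the diagonal keeps the factorisation numerically stable.
        k = [[kernel(a, b) + (nugget if i == j else 0.0) for j, b in enumerate(self.xs)]
             for i, a in enumerate(self.xs)]
        self.low = cholesky(k)
        self.alpha = back_sub_transposed(self.low, forward_sub(self.low, ys))  # K^-1 y

    def predict(self, x):
        """Posterior mean and variance of the surrogate at input x."""
        k_star = [kernel(x, xi) for xi in self.xs]
        mean = sum(ki * ai for ki, ai in zip(k_star, self.alpha))
        v = forward_sub(self.low, k_star)
        var = max(kernel(x, x) - sum(vi * vi for vi in v), 0.0)
        return mean, var


def expensive_model(x: float) -> float:
    """Hypothetical stand-in for a costly simulation of a bridge response."""
    return math.sin(3.0 * x) + 0.5 * x


def adaptive_fit(model, candidates, start, tol=1e-4, max_points=10):
    """Add the candidate with the largest predictive variance until it drops below tol."""
    xs = list(start)
    ys = [model(x) for x in xs]
    while True:
        gp = GPSurrogate(xs, ys)
        worst_var, x_new = max((gp.predict(c)[1], c) for c in candidates)
        if worst_var < tol or len(xs) >= max_points:
            return gp, xs
        xs.append(x_new)
        ys.append(model(x_new))


grid = [i / 20 for i in range(21)]
surrogate, design = adaptive_fit(expensive_model, grid, start=[0.0, 1.0])
print(len(design), surrogate.predict(0.37))
```

In practice the kernel hyperparameters would be estimated (e.g. by maximising the marginal likelihood), and a goal-oriented criterion would weight the variance by its effect on the quantity of interest rather than using the raw variance.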

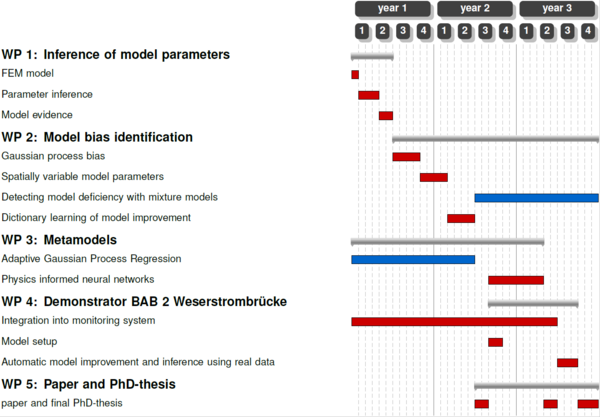

As Figure 2 shows, four work packages are planned for this project.


Project Milestones within the SPP Framework:

- Improved the reliability and quantified the quality of simulation-based digital twins for bridges by introducing model form uncertainty into the process of identifying the model parameters from monitoring data. This allows the models to reflect the actual variability seen in field measurements and enables the prediction of KPIs with realistic error bounds as a basis for decision making.

- Developed an adaptive Gaussian process approach that creates fast, reliable approximations of complex bridge models for use in monitoring and assessment. These surrogate models are a prerequisite for simulation-based digital twins that require quasi-real-time computations with tight precision constraints.

- Used Gaussian Mixture Models and the quantified model form uncertainties to guide potential model improvements for the digital twin. This approach helped identify where the simulation deviated most from real bridge behavior, allowing targeted refinement of the digital twin to better support condition assessment.

- Demonstrated the full calibration process, including model form uncertainty, on a simplified thermal model of the Nibelungenbrücke using real monitoring data. This led to accurate, uncertainty-aware predictions of the thermal behavior, showing how calibrated models can enhance the interpretation of sensor data and support a better assessment of the bridge's current and future state.
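One way to picture the first milestone is that model form uncertainty is embedded in a model parameter: instead of a single calibrated value, the parameter carries a spread that absorbs the systematic model error, and this spread is propagated to the KPIs. The sketch below assumes such a spread has already been calibrated and shows only the propagation step, by Monte Carlo sampling. `kpi_model` (a deflection inversely proportional to a stiffness parameter), `predict_kpi` and all numbers are hypothetical; this is neither the thermal model of the Nibelungenbrücke nor the project's calibration procedure. A bias standard deviation of zero reproduces a purely deterministic prediction with a zero-width interval, i.e. no error bounds at all.

```python
import random
import statistics


def kpi_model(stiffness: float, load: float = 100.0) -> float:
    """Hypothetical KPI: a deflection that is inversely proportional to a stiffness."""
    return load / stiffness


def predict_kpi(mean_stiffness: float, bias_std: float, n_samples: int = 20_000, seed: int = 0):
    """Monte Carlo propagation of an embedded parameter spread to the KPI.

    Returns the sample mean and an empirical 95 % interval (2.5 % and 97.5 % quantiles).
    """
    rng = random.Random(seed)
    samples = sorted(kpi_model(rng.gauss(mean_stiffness, bias_std)) for _ in range(n_samples))
    lower = samples[int(0.025 * n_samples)]
    upper = samples[int(0.975 * n_samples) - 1]
    return statistics.fmean(samples), lower, upper


# Deterministic calibration (no model form uncertainty) vs. an assumed calibrated spread.
print(predict_kpi(mean_stiffness=50.0, bias_std=0.0))  # → (2.0, 2.0, 2.0)
print(predict_kpi(mean_stiffness=50.0, bias_std=2.5))
```

Because the KPI is nonlinear in the parameter, the resulting interval is not symmetric around the deterministic value, which is one reason to propagate samples rather than only a mean and a variance.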


Publications

Peer-Reviewed Journal Papers

Andrés Arcones, D.; Weiser, M.; Koutsourelakis, P.-S.; Unger, J.F.: Model Bias Identification for Bayesian Calibration of Stochastic Digital Twins of Bridges. Applied Stochastic Models in Business and Industry (2024), pp. 1-26, https://doi.org/10.1002/asmb.2897

Becks, H.; Lippold, L.; Winkler, P.; Moeller, M.; Rohrer, M.; Leusmann, T.; Anton, D.; Sprenger, B.; Kähler, P.; Rudenko, I.; Andrés Arcones, D.; Koutsourelakis, P.-S.; Unger, J.F.; Weiser, M.; Petryna, Y.; Schnellenbach-Held, M.; Lowke, D.; Wessels, H.; Lenzen, A.; …; Hegger, J.: Neuartige Konzepte für die Zustandsüberwachung und -analyse von Brückenbauwerken – Einblicke in das Forschungsvorhaben SPP100+/Novel Concepts for the Condition Monitoring and Analysis of Bridge Structures – Insights into the SPP100+ Research Project. Bauingenieur 99 (2024), No. 10, pp. 327-338, https://doi.org/10.37544/0005-6650-2024-10-63

Andrés Arcones, D.; Weiser, M.; Koutsourelakis, P.-S.; Unger, J.F.: Bias Identification Approaches for Model Updating of Simulation-Based Digital Twins of Bridges. Research and Review Journal of Non-Destructive Testing, Vol. 2 (2024), Art. 2, https://doi.org/10.58286/30524

Andrés Arcones, D.; Weiser, M.; Koutsourelakis, P.-S.; Unger, J.F.: Embedded Model Bias Quantification with Measurement Noise for Bayesian Calibration. arXiv preprint (2024), https://doi.org/10.48550/arXiv.2410.12037

Conferences and other publications

Andrés Arcones, D.; Weiser, M.; Koutsourelakis, P.-S.; Unger, J.F.: Evaluation of model bias identification approaches based on Bayesian inference and applications to Digital Twins. In: UNCECOMP 2023 – 5th International Conference on Uncertainty Quantification in Computational Science and Engineering, 12-14 June 2023, Athens, Greece, pp. 67-81.

Andrés Arcones, D.; Weiser, M.; Koutsourelakis, P.-S.; Unger, J.F.: A Bayesian Framework for Simulation-based Digital Twins of Bridges. In: ce/papers (EuroStruct 2023), Vol. 6 (2023), Iss. 5, pp. 734-740.

Villani, P.; Unger, J.F.; Weiser, M.: Adaptive Gaussian Process Regression for Bayesian Inverse Problems. In: Proceedings of the Conference Algoritmy 2024, 15-20 March 2024, Vysoké Tatry, Slovakia, pp. 214-224.

Andrés Arcones, D.; Weiser, M.; Koutsourelakis, P.-S.; Unger, J.F.: Uncertainty Quantification and Model Extension for Digital Twins of Bridges through Model Bias Identification. In: ECCOMAS 2024 – 9th European Congress on Computational Methods in Applied Sciences and Engineering, 3-7 June 2024, Lisbon, Portugal.

Unger, J.F.; Andrés Arcones, D.: Uncertainty Quantification and Model Extension for Digital Twins through Model Bias Identification. In: FrontUQ 2024, 24-27 September 2024, Braunschweig, Germany.

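Andrés Arcones, D.; Unger, J.F.: Modell- und Parameterunsicherheiten am Beispiel eines digitalen Brückenzwillings [Model and parameter uncertainties illustrated by a digital twin of a bridge]. In: Tagungsband 11. Jahrestagung des DAfStb mit 63. Forschungskolloquium der BAM [Proceedings of the 11th Annual Conference of the DAfStb with the 63rd Research Colloquium of BAM], 16-17 October 2024, Berlin, Germany, pp. 218-223.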

Unger, J.F.; Robens-Radermacher, A.; Andrés Arcones, D.; Saif-Ur-Rehman, S.-U.-R.: Bayesian model updating for predictive digital twins under model form uncertainty. In: DTE-AICOMAS 2025, 17-21 February 2025, Paris, France.

Andrés Arcones, D.; Weiser, M.; Koutsourelakis, P.-S.; Unger, J.F.: Incorporating model form uncertainty in digital twins for reliable parameter updating and quantities of interest analysis. In: 95th Annual GAMM Meeting, 7-11 April 2025, Poznań, Poland.